Concurrency and Parallelism

In this chapter, we will learn the difference between concurrency and parallelism in detail.

Welcome to the world of concurrent programming. As we explore concurrency and parallelism and implement them in Go, you may encounter Go-specific features such as WaitGroups and channels before they are formally introduced. We recommend focusing on the core concepts first, trusting that everything will come together as you progress.

Are you excited? Let's embark on this journey with Go!

Concurrency

Concurrency is the ability to run multiple processes independently, with their executions overlapping in time. Concurrency by itself does not prevent issues such as race conditions or deadlocks: when two or more threads share or access the same resource, a concurrent program must be designed to avoid them. In a correctly designed concurrent program, independent computations can be carried out in any order and still yield consistent results.

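On single-core hardware, concurrency is achieved through context switching: the CPU switches rapidly between tasks, so they make progress in overlapping time periods without ever running at the same instant. On multicore hardware, concurrent tasks can also run in parallel on different cores.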
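To see concurrency without parallelism in Go, the sketch below limits the runtime to one logical processor with `runtime.GOMAXPROCS(1)`, so at most one goroutine executes Go code at any instant. This only approximates single-core hardware (the OS may still move that one thread between cores), and the `interleave` function and the worker names `A` and `B` are hypothetical. Each worker calls `runtime.Gosched` to yield, which lets the Go scheduler switch to the other goroutine, much like a context switch.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// interleave is a hypothetical helper: it runs two goroutines while
// GOMAXPROCS is 1 and records the order in which their steps happen.
func interleave(steps int) []string {
	prev := runtime.GOMAXPROCS(1)  // at most one thread runs Go code at a time
	defer runtime.GOMAXPROCS(prev) // restore the previous setting

	var (
		mu    sync.Mutex // still needed: the memory model requires synchronization
		order []string
		wg    sync.WaitGroup
	)
	worker := func(name string) {
		defer wg.Done()
		for i := 0; i < steps; i++ {
			mu.Lock()
			order = append(order, fmt.Sprintf("%s-%d", name, i))
			mu.Unlock()
			runtime.Gosched() // yield so the scheduler can switch goroutines
		}
	}

	wg.Add(2)
	go worker("A")
	go worker("B")
	wg.Wait()
	return order
}

func main() {
	// The exact order is chosen by the scheduler and is not guaranteed.
	fmt.Println(interleave(3))
}
```

Run it a few times: both goroutines always finish, but the order in which their steps interleave is decided by the scheduler.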

Understanding Concurrency with an example

Let's take the example of booking a movie ticket.

```
function {
    seat = 1

    thread_one {
        if (seat > 0) {
            book_ticket()
            seat--
        }
    }

    thread_two {
        if (seat > 0) {
            book_ticket()
            seat--
        }
    }
}
```

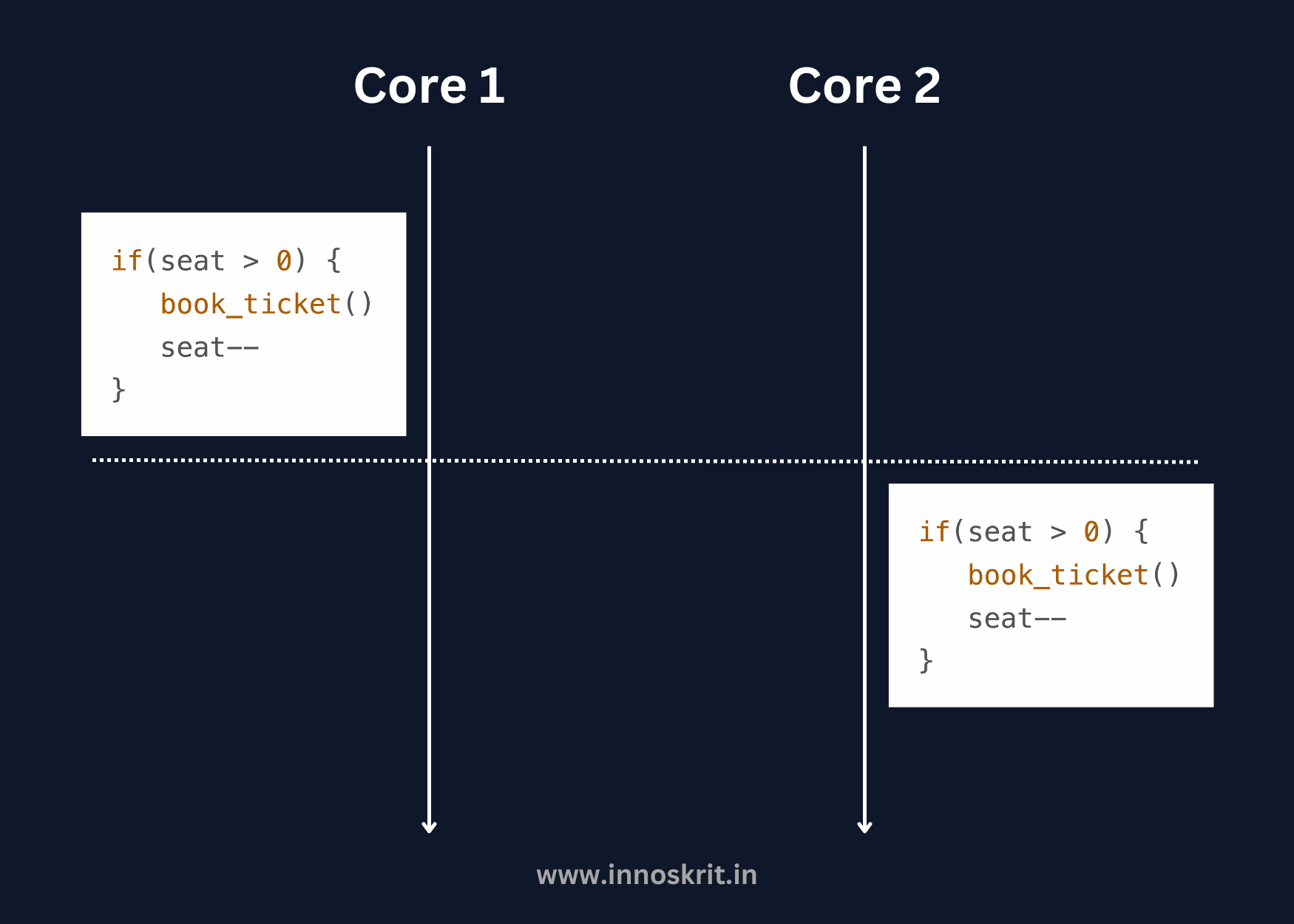

Let’s suppose we have a multicore processor. In our processor, thread_one is running on core 1, thread_two is running on core 2, and only one seat is available.

Let’s explore different possible scenarios.

In the first scenario, although both threads run independently on different cores, thread_one happens to execute completely and decrement the available seats before thread_two checks them. The number of seats is now zero, so thread_two can’t book a ticket. However, nothing guarantees that the cores will interleave the statements in the same order every time.

Let’s take another scenario in which the statements execute in a different order.

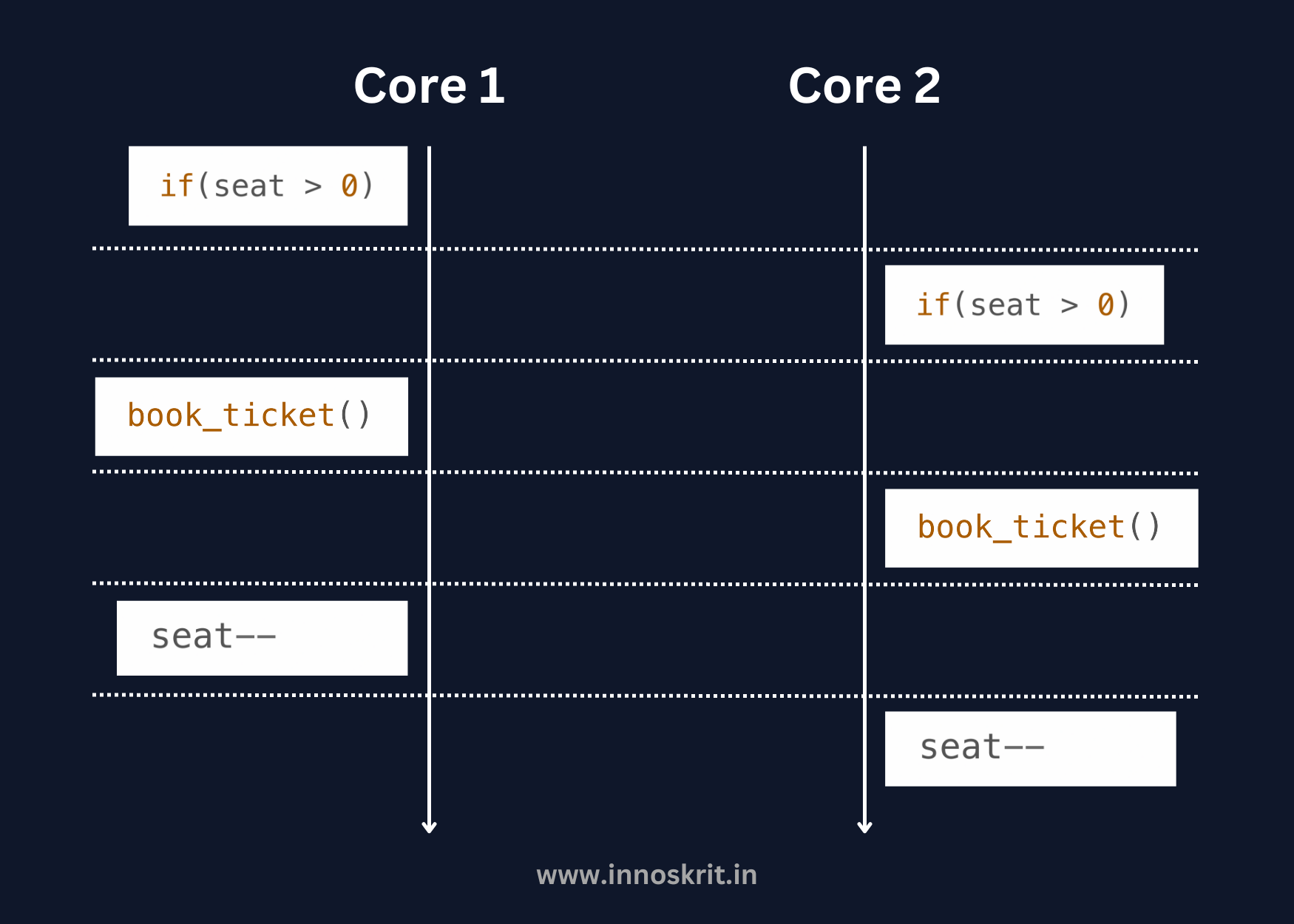

In this case, only one statement executes at a time, but the statements of the two threads are interleaved: both threads check the available seats while the count is still greater than zero, so both threads book a ticket.

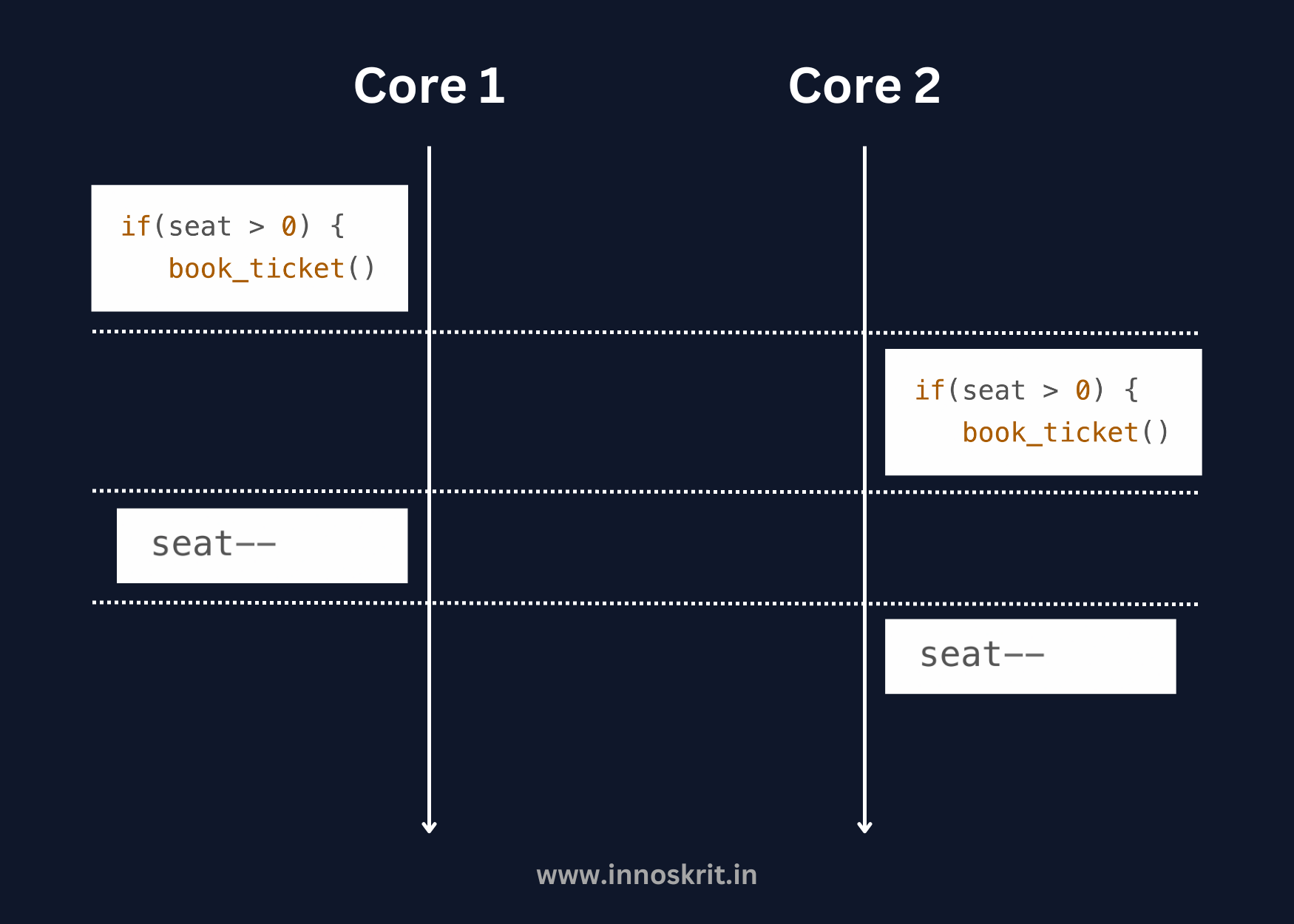

Now consider the third scenario: both threads evaluate their if conditions before either thread updates the seat count, so both book a seat.

The key point is that we can’t predict which statement will execute at what time. The same problem can occur on single-core hardware, because a context switch can happen between checking the seat count and updating it.
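The pseudocode goes wrong because the check (`seat > 0`) and the update (`seat--`) are separate steps that another thread can slip between. One common fix, which the text above does not prescribe, is to guard both steps with a mutex from Go's `sync` package so they execute as a single atomic step. The `theater` type and the `tryBook` and `bookConcurrently` functions are hypothetical names for this sketch, and the actual booking work is omitted.

```go
package main

import (
	"fmt"
	"sync"
)

// theater is a hypothetical type holding the number of available seats.
type theater struct {
	mu    sync.Mutex
	seats int
}

// tryBook performs the check (seats > 0) and the update (seats--)
// while holding the mutex, so no other goroutine can run in between.
func (t *theater) tryBook() bool {
	t.mu.Lock()
	defer t.mu.Unlock()
	if t.seats > 0 {
		t.seats-- // the real book_ticket() work would also happen here
		return true
	}
	return false
}

// bookConcurrently starts n goroutines that each try to book a seat and
// returns how many bookings succeeded and how many seats remain.
func bookConcurrently(seats, n int) (booked, left int) {
	t := &theater{seats: seats}
	results := make(chan bool, n) // buffered so no goroutine blocks on send
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			results <- t.tryBook()
		}()
	}
	wg.Wait()
	close(results)
	for ok := range results {
		if ok {
			booked++
		}
	}
	return booked, t.seats
}

func main() {
	// One seat, two competing goroutines (thread_one and thread_two).
	booked, left := bookConcurrently(1, 2)
	fmt.Println("booked:", booked, "seats left:", left) // booked: 1 seats left: 0
}
```

Because the lock is held across both the check and the update, no interleaving can let two goroutines both see a seat count greater than zero for the last seat, so the double-booking scenarios above cannot occur.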

Concurrency in Golang

Concurrency in Golang is built around goroutines, a distinctive feature of the language. Unlike some other programming languages that rely directly on kernel threads, Golang introduces goroutines as lightweight, independently executing functions that the Go runtime schedules onto a smaller number of OS threads.

Key concurrency constructs in Golang include:

- Goroutines: A goroutine is a function executing concurrently with other goroutines on the same thread or set of threads. It runs independently of the function or goroutine that started it. Starting a function with the go keyword runs it in the background, much like appending & to a command in shell scripting.

- Channels: A channel is a pipeline for sending and receiving data to communicate between goroutines.

- Select: The select statement waits on several channel operations and proceeds with one of them. If several cases are ready, it chooses one of them at random. If none of the cases is ready, select runs the default case if one is present; otherwise, it blocks until a case becomes ready.
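The sketch below combines the three constructs: `produce` runs as a goroutine, values travel over channels, and `select` receives from whichever channel is ready. `produce`, `collect`, and `tryReceive` are hypothetical helpers written for this illustration; only `go`, `chan`, and `select` are language features. Setting a closed channel variable to `nil` is a common idiom, because a nil channel is never ready and `select` then ignores that case.

```go
package main

import "fmt"

// produce is started as a goroutine; it sends 1..n on out and then closes it.
func produce(out chan<- int, n int) {
	for i := 1; i <= n; i++ {
		out <- i
	}
	close(out)
}

// collect uses select to receive from whichever channel is ready
// until both channels are closed, and returns the sum of all values.
func collect(a, b <-chan int) int {
	sum := 0
	for a != nil || b != nil {
		select {
		case v, ok := <-a:
			if !ok {
				a = nil // a nil channel is never ready, so this case is skipped
				continue
			}
			sum += v
		case v, ok := <-b:
			if !ok {
				b = nil
				continue
			}
			sum += v
		}
	}
	return sum
}

// tryReceive shows select with a default case: it never blocks.
func tryReceive(ch <-chan int) (int, bool) {
	select {
	case v := <-ch:
		return v, true
	default:
		return 0, false // nothing ready, so default runs instead of blocking
	}
}

func main() {
	a := make(chan int)
	b := make(chan int)
	go produce(a, 3) // sends 1, 2, 3
	go produce(b, 4) // sends 1, 2, 3, 4
	fmt.Println("sum:", collect(a, b)) // sum: 16

	_, ok := tryReceive(make(chan int))
	fmt.Println("received from empty channel:", ok) // received from empty channel: false
}
```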

Parallelism

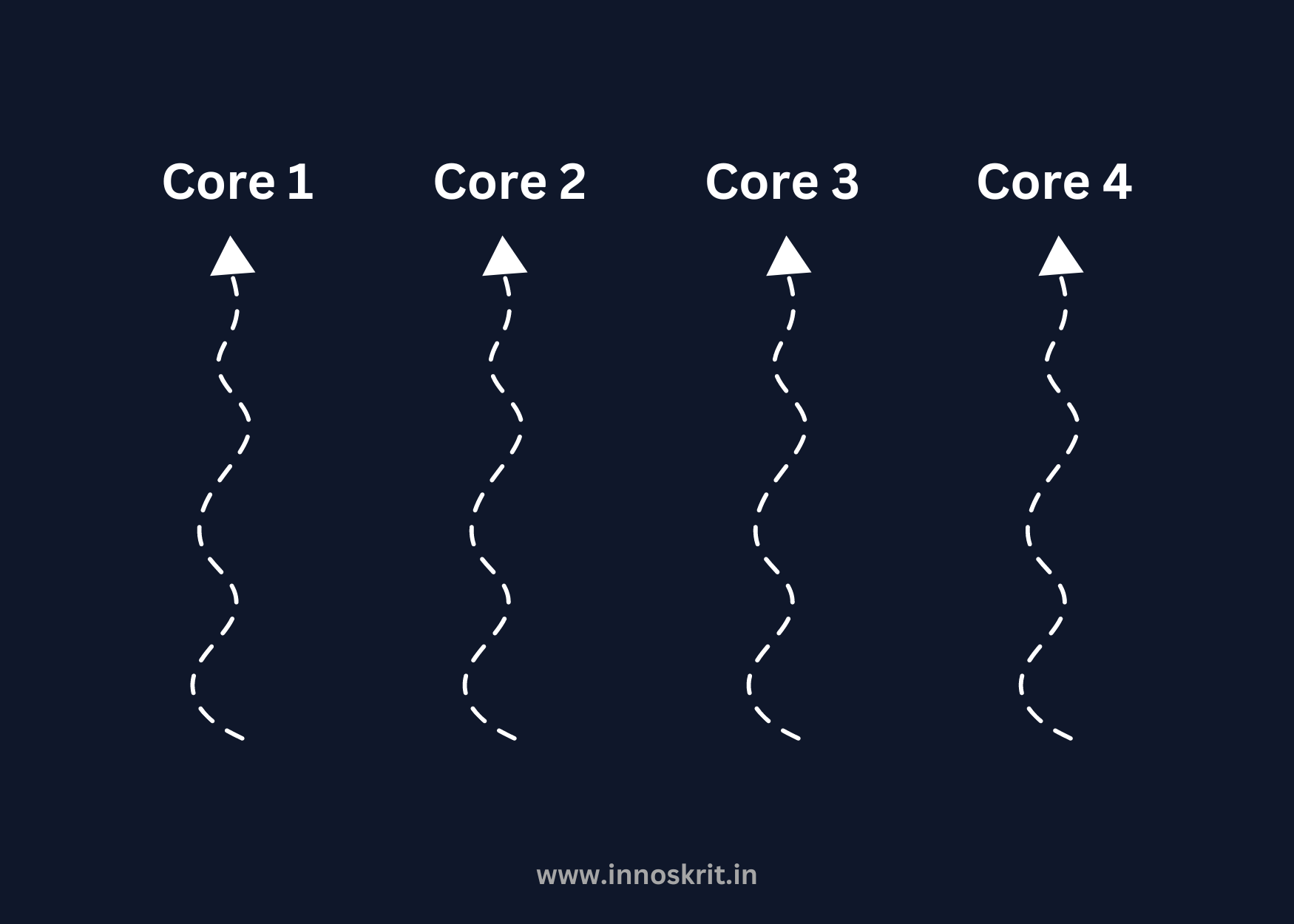

Parallelism is a paradigm that helps us make full use of the hardware. Let’s understand it in depth. Assume we have four-core hardware and N tasks to perform. If we multiplex all the tasks onto the same core, we won’t fully utilize the hardware, because the other three cores will sit idle.

The main idea behind parallelism is that we can perform multiple tasks simultaneously on different cores. Thus, if we have N tasks and four cores, parallelism lets up to four tasks execute simultaneously at any given time.
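Here is a minimal Go sketch of spreading N tasks across cores, assuming the work is a simple sum: `parallelSum` (a hypothetical helper) divides the input into one chunk per worker and sums each chunk in its own goroutine. Go makes parallel execution possible but does not guarantee it. By default, the runtime allows as many OS threads to execute Go code simultaneously as there are CPUs (the GOMAXPROCS setting), and the OS decides which cores those threads run on.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// parallelSum is a hypothetical helper: it splits nums into one chunk per
// worker and sums each chunk in a separate goroutine, so the chunks can be
// processed on different cores at the same time.
func parallelSum(nums []int, workers int) int {
	if workers < 1 {
		workers = 1
	}
	partial := make([]int, workers) // each worker writes only its own slot
	chunk := (len(nums) + workers - 1) / workers
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		start := w * chunk
		if start >= len(nums) {
			break // fewer items than workers: no more chunks to hand out
		}
		end := start + chunk
		if end > len(nums) {
			end = len(nums)
		}
		wg.Add(1)
		go func(w, start, end int) {
			defer wg.Done()
			for _, n := range nums[start:end] {
				partial[w] += n
			}
		}(w, start, end)
	}
	wg.Wait()
	total := 0
	for _, p := range partial {
		total += p
	}
	return total
}

func main() {
	nums := make([]int, 1000)
	for i := range nums {
		nums[i] = i + 1
	}
	workers := runtime.NumCPU() // for example, 4 on four-core hardware
	fmt.Println(parallelSum(nums, workers)) // 500500
}
```

Giving each worker its own slot in `partial` avoids shared writes, so no lock is needed; the partial results are combined only after `wg.Wait` returns.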

Concluding Concurrency and Parallelism

As shown above, we have two queues and a single coffee machine, and every person in both queues needs to use it. To keep both queues moving, we perform context switching: we hold one queue and let one person at a time through from the other queue, then alternate. That's how concurrency works. Now let's look at parallelism.

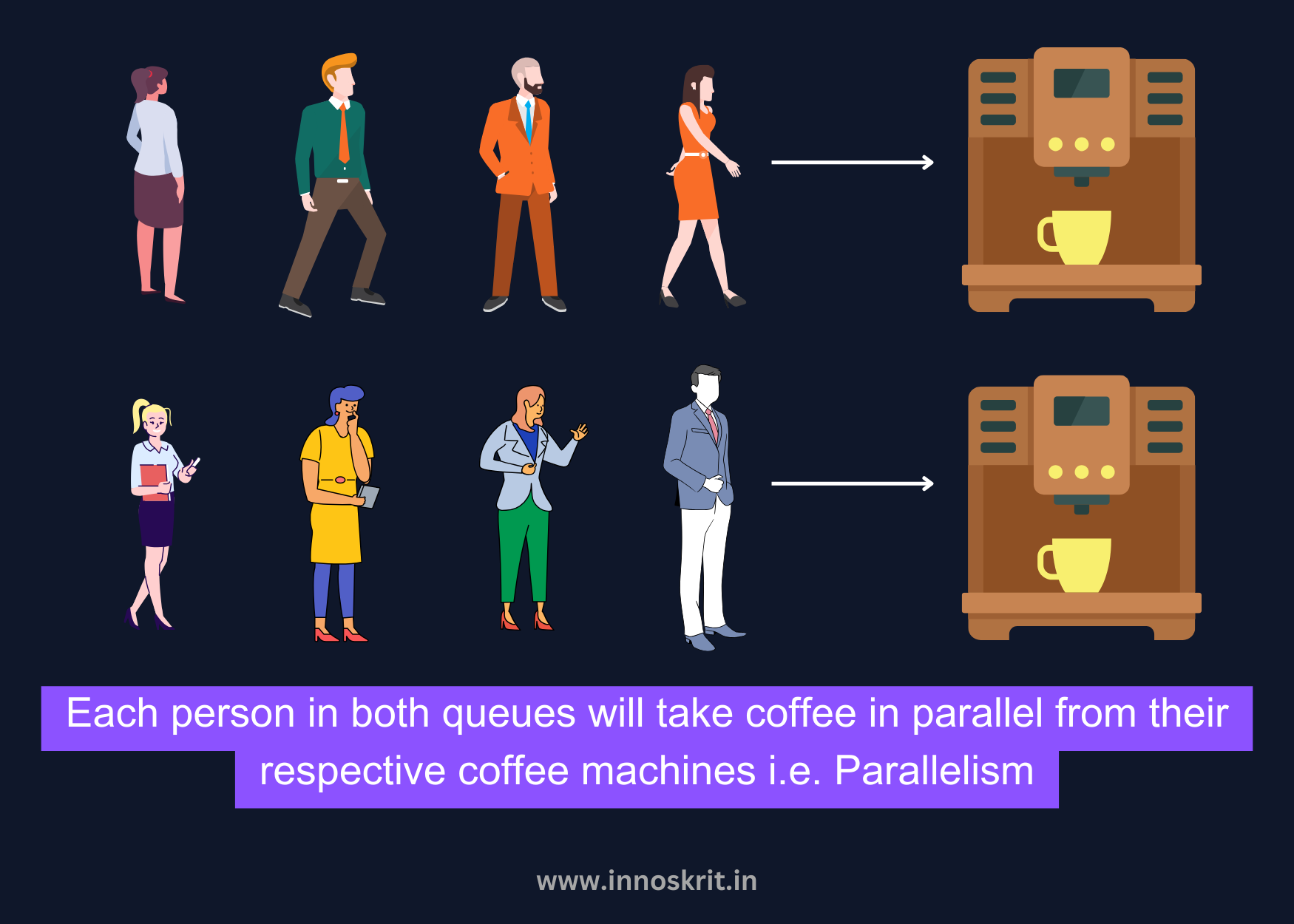

In this example, we have two coffee machines, which represent a multicore system. Each queue has its own machine, so we no longer need context switching.

Two processes run simultaneously and independently of each other, which is parallelism.
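The coffee-machine analogy can be modeled in Go as a rough sketch. In `oneMachine`, both queues send their people over a single channel and one machine goroutine serves them one at a time, taking turns between the queues in whatever order the scheduler delivers them. In `twoMachines`, each queue gets its own machine goroutine. The function names and IDs such as `A1` are hypothetical, and whether the two machines actually run at the same instant depends on the number of cores and the scheduler.

```go
package main

import (
	"fmt"
	"sync"
)

// joinQueue sends every person in a queue, in order, to a machine's order channel.
func joinQueue(people []string, orders chan<- string, wg *sync.WaitGroup) {
	defer wg.Done()
	for _, p := range people {
		orders <- p
	}
}

// oneMachine models one shared coffee machine: both queues feed the same
// channel, and a single consumer serves one person at a time.
func oneMachine(a, b []string) []string {
	orders := make(chan string)
	var wg sync.WaitGroup
	wg.Add(2)
	go joinQueue(a, orders, &wg)
	go joinQueue(b, orders, &wg)
	go func() { wg.Wait(); close(orders) }() // close once both queues are empty

	var served []string
	for p := range orders { // the only machine
		served = append(served, p)
	}
	return served
}

// twoMachines models one machine per queue: each queue is served by its
// own goroutine, independently of the other.
func twoMachines(a, b []string) (servedA, servedB []string) {
	var wg sync.WaitGroup
	machine := func(queue []string, served *[]string) {
		defer wg.Done()
		for _, person := range queue {
			*served = append(*served, person) // each machine writes only its own slice
		}
	}
	wg.Add(2)
	go machine(a, &servedA)
	go machine(b, &servedB)
	wg.Wait()
	return servedA, servedB
}

func main() {
	a := []string{"A1", "A2", "A3"}
	b := []string{"B1", "B2"}
	fmt.Println(oneMachine(a, b)) // interleaving of the queues varies between runs
	sa, sb := twoMachines(a, b)
	fmt.Println(sa, sb) // [A1 A2 A3] [B1 B2]
}
```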

In the next chapter, we will look at synchronous and asynchronous programming with different cores in detail.